We’ve all seen the impressive AI agent demos. But when it’s time to move from a demo to a mission-critical production system, one that manages a factory floor or prevents a multimillion-dollar machine from going down, a painful gap emerges. Projects stall on reliability, observability, and the plain old trust required to hand over the keys to a business process.

This isn’t only a technical problem; it’s a business continuity risk. The market has plenty of clever demos; it lacks systems that behave like production-grade industrial software.

We distilled two years of building enterprise agentic solutions into the ten design rules of our cognitive agentic architecture (CAA). Follow them and your agents behave like industrial software, not science-fair chatbots.

The 10 Principles of cognitive agentic architecture

| # | Principle | Sound-bite |
|---|---|---|
| 1 | Small, Focused Agents | One agent, one job. |
| 2 | Separation of Concerns | Prompt ≠ tool ≠ state. |
| 3 | Explicit Control Flow | Plans are data, not magic. |
| 4 | Structured Context | Garbage in, garbage out. |
| 5 | Prompt = Code | Version. Test. Roll back. |
| 6 | Tools as Contracts | Typed I/O. Predictable behavior. |
| 7 | Observable Everything | If you can’t trace it, you can’t trust it. |
| 8 | State is Explicit | Resumable, not restartable. |
| 9 | Composable Error Handling | Retry, fallback, escalate. By design. |
| 10 | Human Collaboration by Design | Autonomy is earned, not assumed. |

(Scroll on for the “why” behind each rule.)

Principle Deep-Dive

Each principle is a rule we learned by deploying agents in high-stakes industrial environments. Here’s the “why” behind each one, and the business impact it delivers.

1. Small, Focused Agents

- The Principle: Do one thing and do it well. An agent responsible for “categorizing a ticket” should not also be responsible for “querying a spare parts database.” Scoped agents are predictable, testable, and reusable. They combine like microservices, not like spaghetti prompts.

- Business Impact: Your automation is built from reliable, interchangeable parts, not a fragile monolith. When a single component needs an update, you modify one agent, not rewrite the entire system. This means lower maintenance costs and faster adaptation to new business requirements.
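To make the "one agent, one job" idea concrete, here is a minimal sketch in plain Python. The names (`Ticket`, `categorize_ticket`, `lookup_spare_part`, `handle`) and the in-memory catalog are assumptions for illustration, and a keyword rule stands in for a real model call. It is not taken from the Arti Agent Stack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ticket:
    text: str


def categorize_ticket(ticket: Ticket) -> str:
    """Agent 1: only assigns a category (a keyword rule stands in for an LLM call)."""
    return "spare_parts" if "part" in ticket.text.lower() else "general"


def lookup_spare_part(ticket: Ticket) -> str:
    """Agent 2: only queries a hypothetical in-memory parts catalog."""
    catalog = {"bearing": "SKU-001", "belt": "SKU-002"}
    for name, sku in catalog.items():
        if name in ticket.text.lower():
            return sku
    return "unknown"


def handle(ticket: Ticket) -> dict:
    """Composition lives outside the agents, so each one can be tested or swapped alone."""
    category = categorize_ticket(ticket)
    result = {"category": category}
    if category == "spare_parts":
        result["sku"] = lookup_spare_part(ticket)
    return result
```

Because neither agent knows about the other, replacing the catalog lookup with a real database client would not touch the categorizer.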

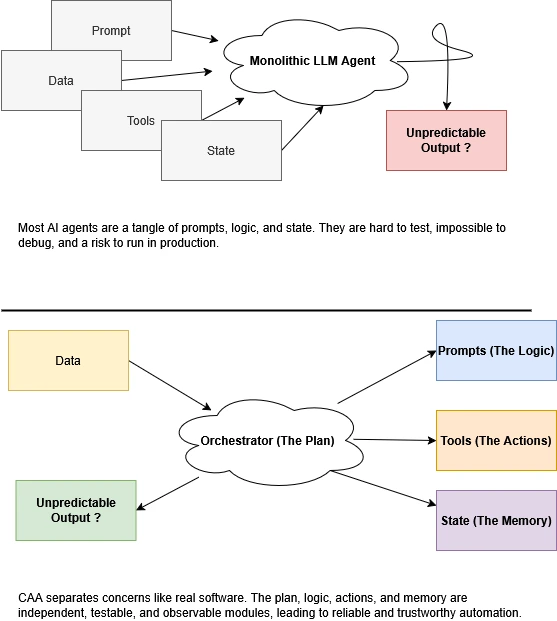

2. Separation of Concerns

- The Principle: Prompting, tool logic, execution routing, and state must live in different, independent modules. When something breaks, you know precisely where to look.

- Business Impact: Faster, cheaper debugging. Instead of a multi-day “AI investigation,” your team can pinpoint the failure in minutes. This is the difference between a minor operational hiccup and a system-wide outage.
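One way to picture this separation is four small units that never import each other's internals. The sketch below keeps them in one file for brevity; in a real codebase each would likely be its own module. All names and the sample data are hypothetical.

```python
from dataclasses import dataclass, field

# Prompt module: only text templates.
PROMPT_TEMPLATE = "Classify this maintenance ticket: {ticket}"


def render_prompt(ticket: str) -> str:
    return PROMPT_TEMPLATE.format(ticket=ticket)


# Tool module: only system access, no prompting and no routing.
def lookup_machine(machine_id: str) -> dict:
    machines = {"M-7": {"line": "A", "status": "running"}}  # stand-in for a real system
    return machines[machine_id]


# Routing module: only decides which step comes next.
def next_step(category: str) -> str:
    return {"fault": "lookup_machine", "question": "answer"}.get(category, "escalate")


# State module: only records what has happened so far.
@dataclass
class RunState:
    ticket: str
    history: list = field(default_factory=list)

    def record(self, step: str) -> None:
        self.history.append(step)
```

With this layout, a wrong routing decision shows up in a test of `next_step` alone, without rendering a prompt or calling a tool.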

3. Explicit Control Flow

- The Principle: The overall business process is defined by a real workflow engine (like a state machine or task graph), not an LLM’s ad-hoc decision-making. The plan is data you can see and audit, not magic.

- Business Impact: Your core business process is explicitly designed, documented, and auditable. It behaves like an SOP, not a black box. You can prove to a customer, regulator, or your CEO that your automated process follows the approved steps, every single time.
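As a rough illustration of "plans are data," the sketch below encodes a process as a dictionary-based state machine. The state names, the `PLAN` table, and the `run` function are assumptions made for this example; a production system would more likely use a dedicated workflow engine.

```python
# The plan is plain data: each state maps an outcome label to the next state.
PLAN = {
    "categorize": {"ok": "diagnose", "error": "escalate"},
    "diagnose": {"ok": "notify", "error": "escalate"},
    "notify": {"ok": "done", "error": "escalate"},
}
TERMINAL = {"done", "escalate"}


def run(plan, handlers, start="categorize"):
    """Walk the plan. Handlers return an outcome label; they never pick the next state."""
    state, visited = start, []
    while state not in TERMINAL:
        visited.append(state)
        outcome = handlers[state]()
        state = plan[state][outcome]
    visited.append(state)
    return visited


# Usage: every handler succeeds, so the run follows the approved path.
happy_path = run(PLAN, {name: (lambda: "ok") for name in PLAN})
```

The handlers (which may wrap model calls) only report what happened. The transition table decides what comes next, so the sequence of steps can be reviewed and audited like any other configuration.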

4. Structured Context

- The Principle: The “context” passed to an agent is a typed, versioned data structure, not a messy blob of text. It’s validated before it’s used.

- Business Impact: Malformed input is caught at the boundary instead of propagating as “garbage in, garbage out.” This prevents an entire class of errors where the AI hallucinates or misinterprets poorly formatted data, protecting the integrity of your downstream processes.
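A typed, versioned context can be as simple as a frozen dataclass that validates itself on construction. The field names and the `"1.0"` version string below are assumptions for illustration, not the schema used in the Arti Agent Stack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TicketContext:
    schema_version: str
    machine_id: str
    error_code: int
    log_excerpt: str

    def __post_init__(self):
        # Reject bad input before any agent sees it.
        if self.schema_version != "1.0":
            raise ValueError(f"unsupported schema_version: {self.schema_version}")
        if not self.machine_id:
            raise ValueError("machine_id must not be empty")
        if not isinstance(self.error_code, int) or self.error_code < 0:
            raise ValueError("error_code must be a non-negative integer")


def build_context(raw: dict) -> TicketContext:
    """Validate raw input at the boundary; missing or unknown keys raise TypeError."""
    return TicketContext(**raw)
```

Passing `"42"` instead of `42` as `error_code` fails here with a `ValueError`, rather than surfacing later as a confusing model answer.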

5. Prompt = Code

- The Principle: Prompts are treated with the same discipline as production code. They ship through a CI/CD pipeline, have unit tests, are version-controlled, and can be rolled back cleanly.

- Business Impact: You eliminate the risk of an intern “improving” a prompt and accidentally taking down a critical workflow. Changes to your business logic are deliberate, tested, and safe.
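The sketch below shows one possible shape for versioned, testable prompts using only the standard library. The registry layout (`PROMPTS`, `ACTIVE`), the version numbers, and the test are hypothetical; in practice the prompts would live in version control and the test would run in CI.

```python
import string

# Every prompt version stays available so a rollback is a one-line change.
PROMPTS = {
    ("triage", "1.0.0"): "Classify the ticket: $ticket",
    ("triage", "1.1.0"): "Classify the ticket into one of $categories: $ticket",
}
ACTIVE = {"triage": "1.1.0"}


def render(name: str, **variables) -> str:
    """Render the active version; a missing variable raises KeyError."""
    template = string.Template(PROMPTS[(name, ACTIVE[name])])
    return template.substitute(**variables)


def rollback(name: str, version: str) -> None:
    """Switch the active version back to a known one."""
    if (name, version) not in PROMPTS:
        raise KeyError(f"no such prompt version: {name}@{version}")
    ACTIVE[name] = version


def test_triage_prompt_mentions_ticket():
    """A unit test that fails if an edit drops the ticket text from the prompt."""
    text = render("triage", ticket="Pump P-3 leaking", categories="fault, question")
    assert "Pump P-3 leaking" in text
```

Calling `rollback("triage", "1.0.0")` restores the earlier wording without editing any template text.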

6. Tools as Contracts

- The Principle: Every tool an agent can use is treated like a formal API. It has a defined input schema and a guaranteed output schema.

- Business Impact: Predictability and stability. The agent’s interactions with your other systems (like your ERP or CRM) are reliable and won’t cause unexpected downstream failures.
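A tool contract can be sketched with two dataclasses, one for input and one for output. The `check_stock` tool, its fields, and the in-memory inventory are assumptions for illustration; a real tool would call the ERP and might use a schema library for validation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StockQuery:
    part_number: str
    quantity: int


@dataclass(frozen=True)
class StockResult:
    part_number: str
    available: bool
    units_in_stock: int


def check_stock(query: StockQuery) -> StockResult:
    """Hypothetical tool: the in-memory dict stands in for an ERP call."""
    if not isinstance(query, StockQuery):
        raise TypeError("check_stock expects a StockQuery")
    if query.quantity <= 0:
        raise ValueError("quantity must be positive")
    inventory = {"BRG-6204": 12}
    units = inventory.get(query.part_number, 0)
    # The caller always gets a StockResult, never a raw string or a partial dict.
    return StockResult(query.part_number, units >= query.quantity, units)
```

The agent can only call the tool with a `StockQuery`, and downstream steps can rely on the three fields of `StockResult` being present.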

7. Observable Everything

- The Principle: If you can’t trace it, you can’t trust it. Every step, every decision, every tool call for every agent run is logged to an observability platform.

- Business Impact: Complete auditability and trust. When a critical process fails, you have an irrefutable forensic trail to prove why. This is essential for regulatory compliance, customer accountability, and internal quality control.
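The idea of tracing every step can be sketched with a small decorator. Here a module-level list named `TRACE` stands in for an observability backend, and the convention that the wrapped function takes a `run_id` as its first argument is an assumption of this example, not a requirement of any particular platform.

```python
import functools
import time

TRACE = []  # stand-in for an observability backend


def traced(step_name):
    """Record one event per call: run id, step, status, and duration."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(run_id, *args, **kwargs):
            event = {"run_id": run_id, "step": step_name, "started_at": time.time()}
            try:
                result = func(*args, **kwargs)
                event.update(status="ok", output=repr(result))
                return result
            except Exception as exc:
                event.update(status="error", error=repr(exc))
                raise
            finally:
                # Runs on success and on failure, so no step goes unrecorded.
                event["duration_s"] = time.time() - event["started_at"]
                TRACE.append(event)
        return wrapper
    return decorator


@traced("categorize")
def categorize(text):
    return "fault" if "error" in text.lower() else "question"
```

Calling `categorize("run-1", "Error E42 on line A")` returns the category and leaves one event in `TRACE` that can later be filtered by `run_id`.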

8. State is Explicit

- The Principle: Agents checkpoint their state after every significant action. If a server crashes mid-process, the agent can resume from the last successful step, not restart from zero.

- Business Impact: High reliability and fault tolerance. A transient network error doesn’t force you to scrap a multi-hour automated process. The system is resilient by design, just like any other piece of critical factory infrastructure.
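Checkpoint-and-resume can be illustrated with a JSON file that is rewritten after each completed step. The function name, the state layout, and the use of a local file are assumptions for this sketch; a real deployment would more likely use a database or a durable workflow store.

```python
import json
import os


def run_with_checkpoints(steps, checkpoint_path):
    """Run named steps in order, persisting progress after each one."""
    state = {"completed": [], "data": {}}
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)  # resume from the last successful step
    for name, func in steps:
        if name in state["completed"]:
            continue  # already done in an earlier run
        state["data"][name] = func(state["data"])
        state["completed"].append(name)
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, checkpoint_path)  # swap in the new checkpoint atomically
    return state
```

If a step raises, the checkpoint on disk still reflects the last completed step, so calling the function again with the same path skips the finished work. Step results must be JSON-serializable for this sketch to work.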

9. Composable Error Handling

- The Principle: The system knows what to do when things go wrong. The execution plan explicitly defines rules for retrying transient faults, falling back to a simpler tool, or escalating to a human expert.

- Business Impact: The system degrades gracefully instead of failing catastrophically. It automatically handles common issues and only involves your expensive human experts when their judgment is truly required, maximizing their efficiency.
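The retry, fallback, escalate sequence can be expressed as a reusable wrapper. The exception classes and the `with_policy` helper below are hypothetical names for this sketch, and backoff between retries is left out to keep it short.

```python
class TransientError(Exception):
    """Raised for faults worth retrying, such as a timeout."""


class Escalation(Exception):
    """Raised when a human expert must take over."""


def with_policy(primary, fallback=None, max_retries=2):
    """Wrap a step with retry, then fallback, then escalation, in that order."""
    def run(*args):
        for _ in range(max_retries + 1):
            try:
                return primary(*args)
            except TransientError:
                continue  # transient fault: try again
            except Exception:
                break  # non-transient fault: retrying will not help
        if fallback is not None:
            try:
                return fallback(*args)
            except Exception:
                pass  # fallback failed too; fall through to escalation
        raise Escalation(f"{primary.__name__} failed; needs human review")
    return run
```

Because the policy is a wrapper rather than code inside each step, the same rules can be attached to any step in the plan, and the caller decides how an `Escalation` reaches a person.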

10. Human Collaboration by Design

- The Principle: The system assumes human oversight is required for high-stakes decisions. Approval buttons, audit trails, and Slack “Approve / Reject” cards are built-in from day one. Full autonomy is a feature that must be explicitly earned and enabled for a process, not the default.

- Business Impact: This builds trust and allows your experts to safely delegate, knowing they hold the final veto on critical actions. It enables your team to adopt AI as a powerful apprentice, not an unpredictable replacement they have to constantly second-guess.
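An approval gate with opt-in autonomy might look like the sketch below. The `Action` fields, the `AUTONOMOUS_PROCESSES` set, and the `approver` callback (which would be backed by something like a Slack card in practice) are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    process: str
    description: str
    high_stakes: bool


# Processes where full autonomy has been explicitly enabled. Empty by default.
AUTONOMOUS_PROCESSES = set()
AUDIT_LOG = []


def execute(action, approver):
    """Run an action; high-stakes actions need approval unless autonomy was enabled."""
    needs_approval = action.high_stakes and action.process not in AUTONOMOUS_PROCESSES
    # The approver returns "approve" or "reject"; it is only asked when needed.
    decision = approver(action) if needs_approval else "auto"
    AUDIT_LOG.append({"action": action.description, "decision": decision})
    if decision == "reject":
        return "rejected"
    return "executed"
```

Adding a process name to `AUTONOMOUS_PROCESSES` is the explicit step that grants autonomy, and every decision, automatic or human, still lands in the audit log.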

What This Looks Like in Practice

From Magic to Machine

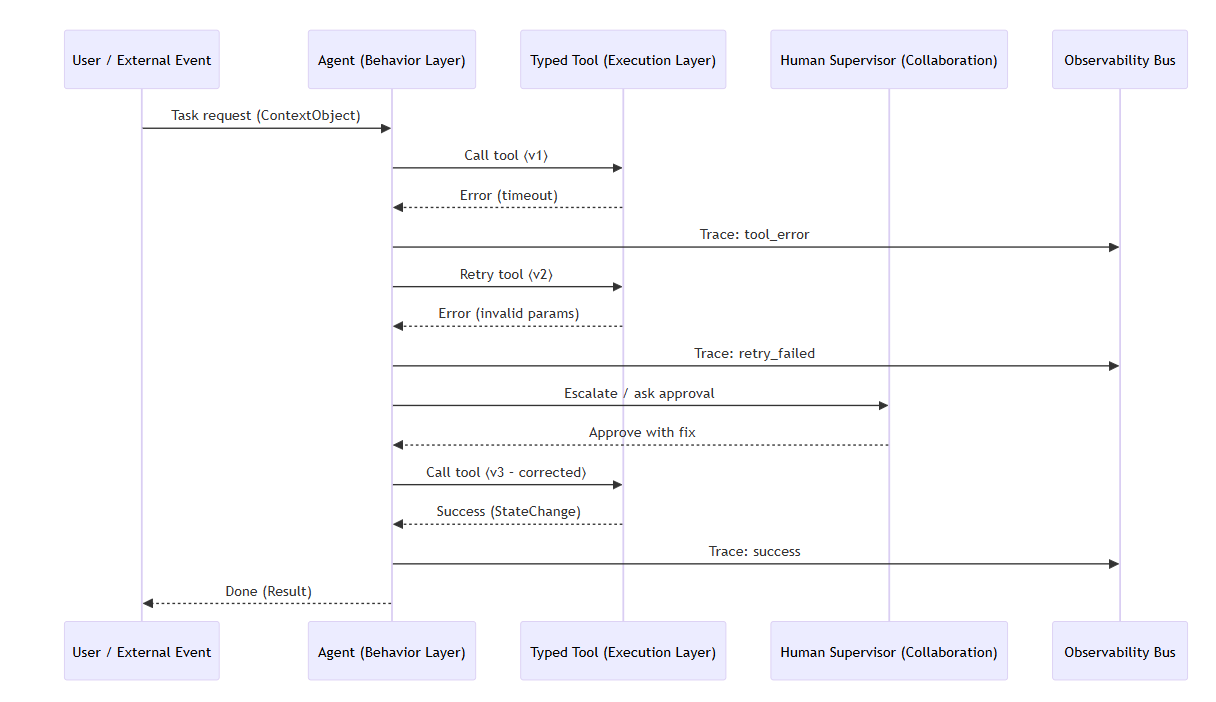

Execution Flow: Retry → Escalate → Resume

- ContextObject → structured, typed input from Context Layer

- Tool ⟨v⟩ → versioned, contract‑driven API

- Trace events flow into Observability for replay & metrics

This diagram demonstrates explicit control flow, structured retries, human‑in‑the‑loop escalation, and full observability, the core tenets of CAA.

From Principles to Code

We’ve open-sourced the Arti Agent Stack, our opinionated implementation of CAA:

- 5-Layer architecture

- Reference Python modules for context building, behavior routing, and state storage [coming soon]

- Observability hooks compatible with OpenTelemetry / Langfuse [coming soon]

- Examples from industrial pilots (machine-log triage, multi-channel ticket automation) [coming soon]

➡️ GitHub: github.com/artiquare/cognitive-agentic-architecture

(The repo includes Markdown docs for each principle if you want to go deeper.)

Why Should You Care?

If your AI strategy is just “Let’s build a chatbot,” these principles may feel like overkill.

But if your strategy involves AI agents running critical business processes, managing factory floors, triaging high-stakes support tickets, or ensuring regulatory compliance, then these principles aren’t just best practices. They are the difference between a successful deployment and a costly failure.

Next steps for cognitive agentic architecture

Coming up: “How Trade Republic’s LLM Ops Team Arrived at Similar Conclusions”, a field study showing convergent evolution toward typed context, observability, and human-in-the-loop (HITL) workflows.

Our work is built on this foundation. Here is how you can engage:

- Start a Pilot: We are currently onboarding a limited number of partners in the industrial automation and machinery sectors to solve the “expert knowledge drain” problem using this production-grade architecture.

- Discuss Your Use-Case: Not ready for a pilot? Open an issue in our GitHub repo to discuss your hardest production challenge. Let’s talk architecture.

- Explore the Code: For the technical leaders on your team, the Arti Agent Stack is open-source. We believe in transparency and software-grade discipline for AI.

Let’s move AI from clever conversation to reliable execution, one principle at a time.