The past year has been a pivotal one for generative AI, especially in the world of open-source Large Language Models (LLMs). At ArtiQuare, we’ve been right in the thick of it, adapting these powerful LLMs for practical, business-focused use.

Our work mainly involves building systems that help businesses use LLMs to automate tasks, conduct research, and create new knowledge from their own data. This journey has led us to focus heavily on Retrieval-Augmented Generation (RAG) systems. RAG has become a key player in AI, thanks to its ability to combine the creative power of LLMs with specific, relevant information from a business’s own data.

The shift towards RAG wasn’t unexpected. While LLMs initially made waves for their ability to chat and interact, it soon became clear that their true value lies in solving real business problems. RAG systems have been at the forefront of this shift, proving to be incredibly useful for businesses looking to get more out of AI.

As we move into 2024, RAG’s role in business AI solutions is set to grow. PwC’s latest annual Global CEO Survey sheds light on how business leaders’ views of generative AI are evolving. While the integration of these technologies has been gradual, CEOs increasingly recognize the opportunities they present: 60% of survey respondents view generative AI as an opportunity rather than a risk, which suggests they anticipate a more significant impact in the near future. Furthermore, over the next three years, 70% of CEOs expect generative AI not only to heighten competition but also to change business models and require new workforce skills.

This outlook aligns well with our focus on adapting LLMs for enterprise use. Before we delve into this topic, let’s take a moment to recap the significant strides made in generative AI in 2023.

Recap of Generative AI Developments in 2023

2023 marked a year of rapid progress in the field of generative AI, characterized by significant advancements and an expanding landscape of open-source tools and projects. The pace of development in Large Language Models (LLMs) was particularly noteworthy, reflecting a broader trend of continuous improvement and innovation.

Key Developments in LLMs and Ecosystems:

- Advancements in Model Capabilities: LLMs have grown not only in size but in sophistication, showcasing improved understanding and generation abilities. These enhancements have opened new doors for practical applications across various industries.

- Growth of Open-Source Projects: The year saw an uptick in open-source projects around LLMs, driven by a community-focused approach to development. This trend has democratized access to cutting-edge AI technologies, enabling a wider range of developers and businesses to experiment and innovate.

- Ecosystem Expansion: Alongside the models themselves, there’s been a surge in the tools and platforms that support LLMs. From data processing utilities to user-friendly interfaces, the ecosystem surrounding LLMs has become richer and more conducive to practical application.

- Rise of Multimodal AI: The emergence and growth of multimodal AI marked a significant stride. These systems, capable of processing and integrating multiple types of data (like text, images, and audio), showed great potential in creating more versatile and context-aware AI solutions.

Having looked at the broader industry trends, we now turn our attention to how these developments are playing out within the enterprise sector.

Adaptation of LLMs in Enterprises:

The adaptation of LLMs within enterprises has been a standout trend. Businesses have increasingly recognized the potential of these models in automating processes, aiding research, and generating new knowledge. In our work, adapting LLMs often meant constructing RAG systems that could leverage company-specific data effectively, making AI tools not just powerful but also relevant and practical for enterprise needs.

The rise of RAG as a dominant architectural choice was aligned with a growing demand for AI solutions that were business-focused. Unlike the early days of LLMs, where the excitement was mostly around their ability to simulate conversation, the focus has now shifted towards leveraging these models for deeper, more substantive enterprise applications.

As we reflect on the year gone by, it’s clear that the journey with generative AI and RAG systems is just getting started. The advancements in 2023 have set the stage for even more innovative and impactful applications in the year ahead.

Let’s explore how these advanced AI tools can be tailored to meet specific, complex business needs, unlocking new avenues for innovation and competitive edge.

Focus on LLM Adaptation for Enterprise Use

In the dynamic landscape of generative AI, one of the most significant trends has been the adaptation of Large Language Models (LLMs) by enterprises for their proprietary and internal data. This move towards customizing LLMs is driven by the need to leverage AI for specific, often complex, business needs.

1. Why Enterprises are Turning to LLM Adaptation:

- Businesses are seeking to harness the power of LLMs to process, analyze, and generate insights from their unique data repositories.

- The goal is to create AI solutions that are not just powerful in terms of raw computational ability but are finely tuned to align with specific enterprise contexts and requirements.

2. Main Techniques in LLM Adaptation:

- Fine-Tuning: Tailoring pre-trained models to better fit specific data or tasks, although this can be resource-intensive.

- Prompt Engineering: Designing prompts that effectively guide the model to produce desired outputs, a technique that has seen widespread use due to its efficiency and simplicity.

- Retrieval-Augmented Generation (RAG): A technique that combines the generative capabilities of LLMs with external data retrieval, particularly suitable for tasks that require a combination of general knowledge and specific, domain-relevant information.

3. Our Experiences with LLM Adaptation:

- We’ve worked extensively with these techniques, applying them to various projects and observing firsthand their potential and limitations.

- Our journey has highlighted that while fine-tuning offers precision, it’s often prompt engineering and RAG that provide the most cost-effective and versatile solutions for enterprise applications.

4. The Growing Importance of Customized AI Solutions:

- This trend towards LLM adaptation underlines a broader shift in the AI industry: from one-size-fits-all models to bespoke solutions that cater to the unique challenges and opportunities of individual businesses.

Our experience with various client projects and AI challenges has not only sharpened our skills but also shown us how RAG is changing the game for businesses. Moving beyond the initial excitement around AI conversations, RAG is now a tool for serious, impactful business applications. As we consider the practical applications of these advancements, the evolution of RAG systems emerges as a key area of focus.

The Rise and Evolution of Retrieval-Augmented Generation Systems

Understanding the Popularity of RAG Systems

As 2023 unfolded, Retrieval-Augmented Generation (RAG) systems increasingly became the architecture of choice for many AI applications, particularly in enterprise settings. This trend was driven by a clear recognition of their potential to provide more focused and relevant AI-powered solutions.

RAG Systems in the Limelight:

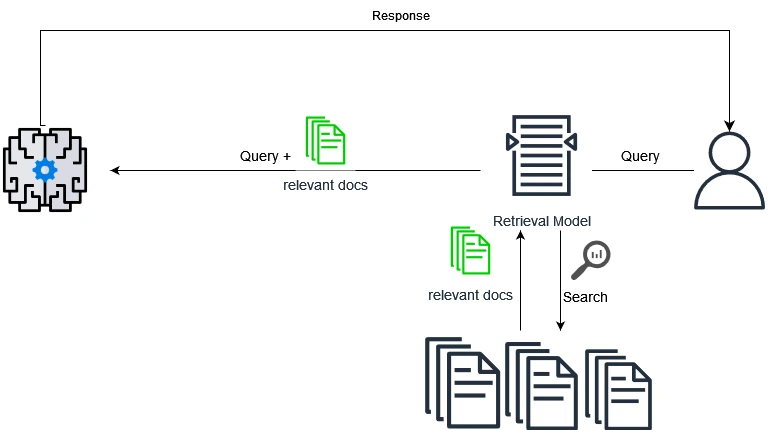

- RAG systems, which combine the generative prowess of LLMs with the ability to pull in specific, relevant information, proved ideal for various business applications.

- Their ability to reference and utilize external data sources made them particularly appealing for tasks requiring depth and specificity, surpassing the capabilities of standalone conversational models.

- The concept of ‘Naive RAG’ – simply vectorizing documents and querying them with LLMs – gained initial traction for its simplicity. However, its limitations became apparent in complex scenarios involving large datasets and sophisticated queries.

To fully grasp the challenges and limitations of naive RAG systems, it’s essential first to understand how they function at a basic level. The naive RAG process can be divided into three key phases: retrieval, augmentation, and generation.

1. Retrieval Phase:

- Description: In this phase, the RAG system searches through a database or a set of documents to find information relevant to a given query. This is typically done using vector similarity search, where the query and documents are converted into vectors (numerical representations) and compared.

- Limitation: The retrieval phase in naive RAG systems often relies on simplistic matching techniques, which can result in the retrieval of irrelevant or superficial information, especially for complex queries.

2. Augmentation Phase:

- Description: The retrieved information is then processed and prepared to augment the response generation. This might involve summarizing or contextualizing the data.

- Limitation: Naive RAG systems may struggle to properly contextualize or synthesize the retrieved data, leading to augmentation that lacks depth or fails to accurately address the query’s nuances.

3. Generation Phase:

- Description: Finally, the augmented data is fed into a language model, which generates a response based on both the original query and the additional context provided by the retrieved data.

- Limitation: If the retrieved data is flawed or the augmentation inadequate, the generation phase can produce responses that are misleading, incomplete, or contextually off-target.
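
The three phases above can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the `embed` function is a bag-of-words stand-in for a real embedding model, `generate` is a placeholder for an LLM call, and the documents and all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List

# Toy corpus standing in for company documents (illustrative data only).
DOCUMENTS = [
    "RAG combines document retrieval with text generation.",
    "Fine-tuning adapts a pre-trained model to a specific task.",
    "Prompt engineering guides a model with carefully designed instructions.",
]

def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Retrieval: rank documents by similarity to the query, keep the top k."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def augment(query: str, context: List[str]) -> str:
    """Augmentation: place the retrieved text into the prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\nQuestion: {query}"
    )

def generate(prompt: str) -> str:
    """Generation: placeholder for an LLM call (assumption, no real model)."""
    return f"[LLM response to a prompt of {len(prompt)} characters]"

def naive_rag(query: str, docs: List[str]) -> str:
    return generate(augment(query, retrieve(query, docs)))

if __name__ == "__main__":
    print(augment("What is retrieval in RAG?", retrieve("What is retrieval in RAG?", DOCUMENTS)))
```

Each function corresponds to one phase, which makes it easy to see that a weak `retrieve` step limits everything that follows it.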

Understanding the structure of RAG systems also requires an examination of their limitations, particularly in their more basic or ‘naive’ forms.

In-depth Look at Limitations and Problems of Naive RAG

Naive RAG systems, while innovative in their approach to combining retrieval with generative models, often fall short in delivering precise and contextually relevant results, especially in complex enterprise scenarios.

- Retrieval Issues: Problems in the retrieval phase, such as inaccurate or shallow data fetching, set a weak foundation for the entire process.

- Augmentation Challenges: Inadequate augmentation can fail to bridge the gap between raw data and the required contextual understanding for accurate response generation.

- Generation Shortcomings: The final output can be compromised by earlier failures, leading to responses that don’t truly meet the user’s needs or expectations.

Retrieval Challenges in Basic RAG Systems

When using naive RAG systems in a business setting, it’s crucial to understand where they might fall short, particularly in the initial retrieval phase. This stage is all about how the system finds and uses information to answer a query. Let’s break down these challenges in simpler, management-friendly terms:

1. Confusing Different Meanings:

- What Happens: The system might get confused by words with more than one meaning (like “apple” as a fruit or a company) and fetch wrong information.

- Business Impact: You end up with answers that are off-topic, which can be misleading or unhelpful.

2. Matching Based on Wrong Criteria:

- What Happens: The system sometimes matches based on broad similarities, missing out on the specifics of what you’re really asking for (for example, matching the exact phrase “Retrieval-Augmented Generation (RAG)” in a document that does not answer your question).

- Business Impact: The answers you get might seem related at a glance but don’t actually address your specific query.

3. Difficulty in Finding Close Matches:

- What Happens: In a large pool of data, the system might struggle to distinguish between closely related topics, leading to less accurate matches.

- Business Impact: You receive answers that seem right but are actually about a closely related, different topic.

4. Missing the Context:

- What Happens: The system often misses out on the finer, contextual details of a query, focusing only on the broader picture.

- Business Impact: The lack of nuanced understanding results in answers that don’t fully capture the query’s intent.

5. Challenges with Niche Topics:

- What Happens: For very specific or niche queries, the system might fail to gather all the relevant pieces of information spread across different sources.

- Business Impact: This can be particularly problematic when dealing with specialized or technical queries, leading to incomplete or surface-level answers.
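
The first challenge, confusing different meanings, can be reproduced with a small experiment. The sketch below uses a deliberately crude bag-of-words similarity as a stand-in for an embedding model, and the query and documents are made up for illustration. Dense embedding models generally cope better with this than word counts do, but similar mismatches can still occur.

```python
import math
import re
from collections import Counter
from typing import List

def embed(text: str) -> Counter:
    """Crude stand-in for an embedding model: word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def rank(query: str, docs: List[str]) -> List[str]:
    """Order documents from most to least similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)

# Hypothetical documents: one about the fruit, one about the company.
FRUIT_DOC = "Apple pie recipe: peel each apple, then bake the apple slices."
COMPANY_DOC = "The iPhone maker reported record quarterly revenue."

ranked = rank("Apple quarterly earnings", [COMPANY_DOC, FRUIT_DOC])

# The recipe ranks first because it repeats the word "apple",
# even though the user is asking about the company.
print(ranked[0])
```

The recipe wins purely on word overlap, which is the kind of off-topic match described in point 1 above.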

Augmentation Challenges Reduce Coherence and Relevance

After the retrieval phase in a naive RAG system, the augmentation phase poses its own set of challenges. This is where the system tries to merge the retrieved information into a coherent and relevant response. Let’s explore these issues and their implications for business applications:

1. Integrating Context Smoothly:

- Challenge: The system might struggle to blend the context of retrieved data with the generation task, leading to disjointed outputs.

- Example: Mixing detailed history with current applications of “Artificial Intelligence” might result in an unbalanced emphasis, neglecting the core focus of the task.

2. Redundancy and Repetition:

- Challenge: Repetition can occur when multiple sources provide similar information, leading to redundant content in the output.

- Example: Repeated mentions of “Retrieval-Augmented Generation” can make the response seem repetitive, reducing its effectiveness.

3. Prioritizing Relevant Information:

- Challenge: Effectively ranking the importance of different pieces of retrieved information is tricky but essential for accurate output.

- Example: Underemphasizing crucial points like “search index” in favor of less critical information can distort the response’s relevance.

4. Harmonizing Diverse Styles and Tones:

- Challenge: Retrieved content might have varying styles or tones, and the system must harmonize these for a consistent output.

- Example: A mix of casual and formal tones in the source material can lead to an inconsistent style in the generated response.
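
Two of these challenges, redundancy and prioritization, lend themselves to a simple illustration. The sketch below shows one possible way to handle them before building the prompt: sort retrieved chunks by their retrieval score, drop low-scoring ones, and skip near-duplicates. The function names, the thresholds, and the use of Jaccard word overlap are assumptions chosen for brevity, not a recommended configuration.

```python
import re
from typing import List, Set, Tuple

def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two texts, from 0.0 to 1.0."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0

def select_context(
    chunks: List[Tuple[str, float]],   # (text, retrieval score) pairs
    max_chunks: int = 3,
    min_score: float = 0.3,            # hypothetical relevance cut-off
    dup_threshold: float = 0.8,        # hypothetical near-duplicate cut-off
) -> List[str]:
    kept: List[str] = []
    # Highest-scoring chunks first, so the most relevant text is prioritized.
    for text, score in sorted(chunks, key=lambda c: c[1], reverse=True):
        if score < min_score or len(kept) == max_chunks:
            break
        # Skip chunks that largely repeat something already kept.
        if any(jaccard(text, k) >= dup_threshold for k in kept):
            continue
        kept.append(text)
    return kept

# Made-up retrieval results for illustration.
chunks = [
    ("RAG stands for Retrieval-Augmented Generation.", 0.80),
    ("A search index stores document vectors for fast lookup.", 0.91),
    ("RAG stands for retrieval-augmented generation!", 0.78),
    ("Our office is closed on public holidays.", 0.12),
]

print(select_context(chunks))
```

With this input, the search-index chunk comes first, the duplicated RAG definition appears once, and the unrelated office notice is dropped.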

Complexities in the Generation Phase of Retrieval-Augmented Generation

The final phase in a naive RAG system, generation, presents its own set of challenges. This is where the system synthesizes the retrieved and augmented data to produce a final response. Let’s unpack the common issues encountered in this phase and their implications.

1. Coherence and Consistency:

- Challenge: Maintaining logical coherence and narrative consistency, especially when integrating diverse information, is difficult.

- Example: An abrupt shift from discussing Python in machine learning to web development without transition can confuse readers.

2. Over-generalization:

- Challenge: The model may provide generic responses instead of specific, detailed information.

- Example: A broad answer to a query about the differences between PyTorch and TensorFlow fails to address the query’s specifics.

3. Error Propagation from Retrieval:

- Challenge: Any inaccuracies or biases in the retrieved data can be amplified in the final output.

- Example: An incorrect claim in retrieved content (e.g., “LlamaIndex is an ML model serving framework”) can lead the generation phase astray.

4. Failure to Address Contradictions:

- Challenge: Contradictory information in retrieved data can confuse the generation process.

- Example: Conflicting statements about PyTorch’s use in research versus production without clarification can mislead readers.

5. Context Ignorance:

- Challenge: The model might overlook or misinterpret the broader context or intent of a query.

- Example: Responding to a request for a fun fact about machine learning with a highly technical point.
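
Some of these issues can at least be flagged after generation. As a rough illustration of the over-generalization case, the sketch below checks whether an answer mentions the specific terms the query was about. It is a simple heuristic under stated assumptions: the function name is hypothetical, key terms are supplied by hand, and only single-word terms are supported.

```python
import re
from typing import List

def missing_key_terms(answer: str, key_terms: List[str]) -> List[str]:
    """Return the key terms that never appear in the answer (case-insensitive).

    Only single-word terms are handled in this sketch.
    """
    answer_tokens = set(re.findall(r"[a-z0-9]+", answer.lower()))
    return [t for t in key_terms if t.lower() not in answer_tokens]

# Key terms taken from a query about the differences between the two frameworks.
key_terms = ["PyTorch", "TensorFlow"]

generic = "Both are popular deep learning frameworks with large communities."
specific = (
    "PyTorch builds graphs dynamically, while TensorFlow historically "
    "used static graphs."
)

print(missing_key_terms(generic, key_terms))   # both terms are missing
print(missing_key_terms(specific, key_terms))  # nothing is missing
```

A non-empty result does not prove the answer is wrong, but it is a cheap signal that the response may be too generic to be useful.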

Given these identified challenges with naive RAG systems, it becomes clear why there’s an increasing shift towards more sophisticated, advanced RAG solutions.

The Need for Advanced Retrieval-Augmented Generation (RAG)

As we conclude our exploration of the basic or naive RAG systems, it becomes evident that while these systems represent a significant step forward in AI, they are not without their limitations. The challenges in retrieval, augmentation, and generation phases often hinder their effectiveness, especially in complex, real-world enterprise applications. These limitations can be summarized as follows:

- Retrieval Issues: Problems with semantic ambiguity, granularity mismatch, and global vs. local similarities leading to inaccurate or irrelevant data fetching.

- Augmentation Challenges: Difficulties in integrating context, handling redundancy, and prioritizing information, resulting in disjointed or superficial content.

- Generation Shortcomings: Issues with coherence, over-generalization, errors carried over from retrieval, unresolved contradictions, and missed context, leading to responses that fail to meet the nuanced demands of business queries.

These challenges underscore the need for a more sophisticated approach – the realm of Advanced RAG Systems. Advanced RAG addresses these limitations head-on, incorporating more refined techniques and methodologies to ensure higher accuracy, relevancy, and contextual alignment of the generated content.

Introducing the Series on Advanced RAG Techniques

In light of these challenges, we are excited to announce an upcoming series that will delve into the world of advanced RAG systems. This series will explore:

- Enhanced Retrieval Mechanisms: How advanced RAG systems achieve more precise and contextually relevant data retrieval.

- Sophisticated Augmentation Strategies: Techniques for better integrating and synthesizing retrieved information.

- Refined Generation Processes: Ways to ensure coherence, depth, and insightfulness in the final generated content.

Advanced RAG systems represent the cutting edge of AI’s capabilities in processing and generating knowledge. They hold the promise of transforming how businesses interact with and leverage AI, moving beyond the limitations of basic RAG to offer solutions that are not just technically advanced, but also highly tailored and effective for specific business needs.

Stay tuned as we embark on this exciting journey into advanced RAG systems, unraveling their potential to revolutionize industries and redefine the future of generative AI.